Before running A/B tests, find out how much traffic the target website gets and what its conversion rate is.

Look, I get this question every week from clients at BrillMark: “How many tests should we be running?” Honestly, most people asking this are thinking about it the wrong way.

After 5 years of running CRO for brands doing anywhere from $50K to $50M annually, here’s what actually matters.

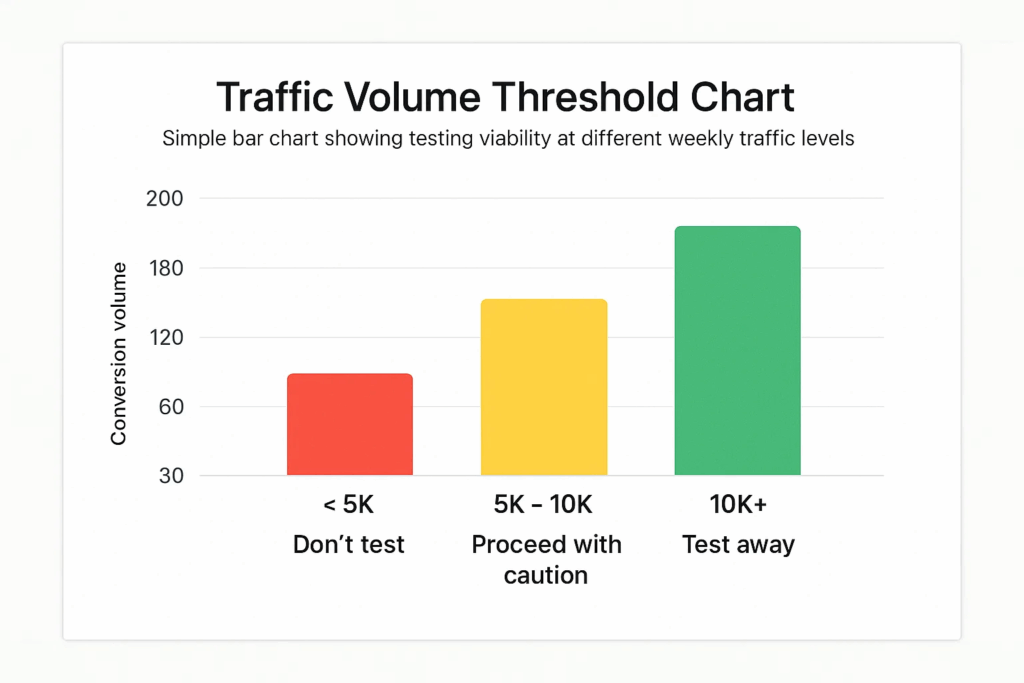

The Traffic Reality Check for A/B Tests

First thing – if you’re not hitting 10K weekly visitors, stop reading conversion optimization blogs. Start reading marketing ones. You need traffic before you need tests.

I’ve seen too many companies with 2K monthly visitors trying to run sophisticated testing programs. It doesn’t work. Your test will run for 6 months before it reaches statistical significance, assuming you even get enough conversions to matter.

The 10K weekly threshold isn’t arbitrary. Combined with a decent conversion rate (2-5%), it gives you roughly 200-500 conversions per week, enough volume to run meaningful tests in 2-4 weeks. Below this threshold, you’re wasting time and money.
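A rough way to sanity-check this is to estimate how long a two-variant test takes to collect a target number of conversions per variant. The sketch below is illustrative, not BrillMark tooling: `weeks_to_target` is a hypothetical helper, and it assumes steady weekly traffic split evenly between control and variant. The default target of 100 conversions per variation matches the minimum this post recommends; the 2% conversion rate for the 2K-monthly site is an assumption.

```python
def weeks_to_target(weekly_visitors: float, conversion_rate: float,
                    conversions_per_variant: int = 100, variants: int = 2) -> float:
    """Estimate the weeks until every variant collects `conversions_per_variant`
    conversions. Hypothetical helper: assumes steady weekly traffic that is
    split evenly across `variants`."""
    if weekly_visitors <= 0 or not 0 < conversion_rate <= 1:
        raise ValueError("need positive traffic and a conversion rate in (0, 1]")
    weekly_conversions = weekly_visitors * conversion_rate
    per_variant_per_week = weekly_conversions / variants
    return conversions_per_variant / per_variant_per_week


# 10K weekly visitors at the low and high end of a "decent" conversion rate
for rate in (0.02, 0.05):
    print(f"10K/week at {rate:.0%}: {weeks_to_target(10_000, rate):.1f} weeks")

# 2K monthly visitors is about 462 per week; the 2% rate is an assumption
print(f"2K/month at 2%: {weeks_to_target(2_000 * 12 / 52, 0.02):.1f} weeks")
```

At 10K weekly visitors the conversion target arrives within about a week, so the 2-week minimum duration becomes the binding constraint. At 2K monthly visitors the same target takes about 22 weeks, roughly five months.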

Conversion Volume Trumps Conversion Rate

Here’s where people mess up. They think a high conversion rate = ready to test. Wrong.

I had a client with a 12% conversion rate but only 500 weekly visitors. That’s 60 conversions per week, even with that high rate. Their tests took forever because 60 conversions split between control and variant (about 30 each) isn’t enough data.

Compare that to a client with a 2% conversion rate but 15K weekly visitors. That’s 300 conversions per week. Guess which one runs more successful tests?

You need conversion volume, not just conversion rate.
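To put numbers on the two clients above, this sketch splits each client’s weekly traffic evenly between control and variant, then reports conversions per variant and the relative standard error of each variant’s measured conversion rate, using the standard binomial approximation sqrt(p(1-p)/n). The helper name `per_variant_stats` is hypothetical. The relative error works out to sqrt((1-p)/conversions), so it shrinks mainly with the number of conversions, not with the rate.

```python
import math


def per_variant_stats(weekly_visitors: int, conversion_rate: float, variants: int = 2):
    """Return (weekly conversions per variant, relative standard error of each
    variant's measured conversion rate), assuming an even traffic split."""
    visitors = weekly_visitors / variants
    conversions = visitors * conversion_rate
    # SE(p_hat) / p = sqrt(p(1-p)/n) / p = sqrt((1-p) / (n*p)), and n*p = conversions
    relative_se = math.sqrt((1 - conversion_rate) / conversions)
    return conversions, relative_se


for label, visitors, rate in [("12% rate, 500/week", 500, 0.12),
                              ("2% rate, 15K/week", 15_000, 0.02)]:
    conversions, rel_se = per_variant_stats(visitors, rate)
    print(f"{label}: {conversions:.0f} conversions per variant, "
          f"measured rate uncertain by about ±{rel_se:.0%} (1 SE)")
```

After one week, the 12% client’s variants hold about 30 conversions each with roughly ±17% relative uncertainty, while the 2% client’s hold about 150 each with roughly ±8%.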

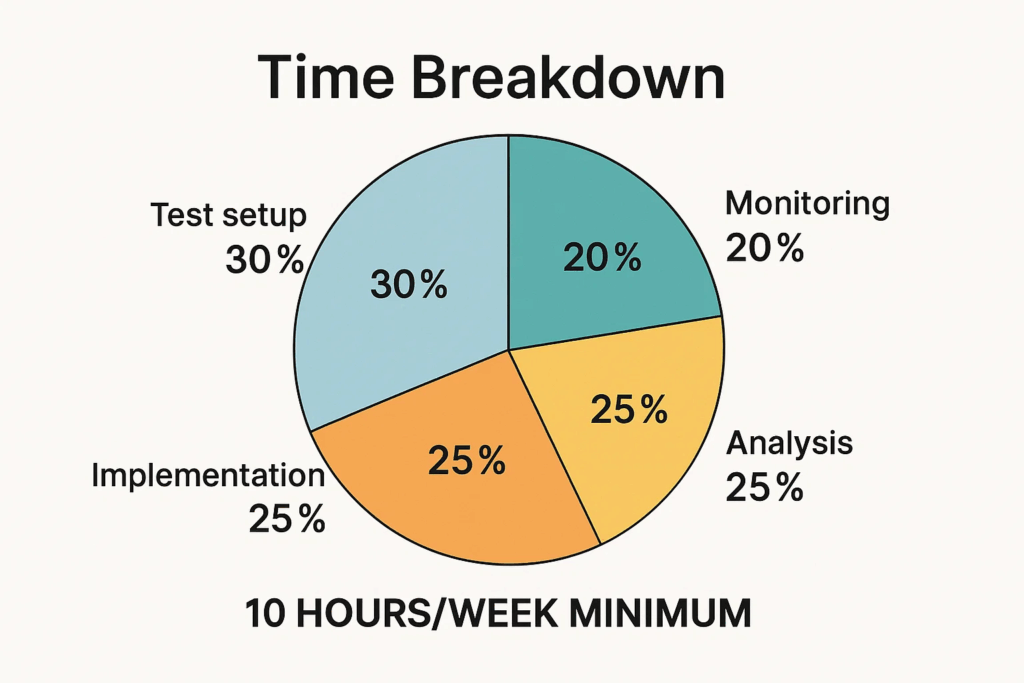

The Resource Question Nobody Talks About

Testing isn’t just about traffic and conversions. It’s about having someone who knows what they’re doing.

Most companies think they can hand A/B testing to their marketing coordinator, who’s already managing 15 other things. Then they wonder why their tests are inconclusive or, worse, implemented incorrectly.

Real talk: if you can’t dedicate at least 10 hours per week to testing (between setup, monitoring, and analysis), don’t bother.

When NOT to A/B Test

Stop testing if:

- You have fewer than 1000 conversions per month

- Your site is broken (fix UX issues first)

- You’re in the middle of a rebrand or major site overhaul

- You don’t have anyone who understands statistical significance

- You’re testing random stuff because you read about it in a blog

I’ve turned away clients who wanted to test button colors when their checkout process had a 70% abandonment rate. Fix the obvious problems first.

The Actual Numbers (Based on Real Client Data)

Here’s what works based on our client portfolio:

For sites with 10K-50K weekly visitors:

- 2-3 tests per month maximum

- Run tests for a minimum of 2 weeks, regardless of early results

- Focus on high-impact areas (checkout, pricing pages, key landing pages)

For sites with 50K-200K weekly visitors:

- 3-5 tests per month

- Can run simultaneous tests on different page types

- Start testing smaller elements after big wins are captured

For sites with 200K+ weekly visitors:

- 5-10 tests per month

- Multiple simultaneous tests across different conversion funnels

- Can test incremental improvements

These numbers assume you have a proper testing infrastructure and someone who knows how to analyze results.

Factors That Actually Influence A/B Testing Volume

Traffic Distribution

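If 80% of your traffic hits your homepage but you want to test your pricing page, a pricing-page test will take much longer to reach the same sample size than a homepage test. Estimate traffic and duration separately for each test, and plan accordingly.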
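As an illustration of per-page planning, this sketch converts a page’s share of site traffic into a test duration in whole weeks, never going below the 2-week minimum recommended above. The helper name, the 20K weekly site traffic, the 80%/5% page shares, and the 10,000-visitors-per-variant requirement are all hypothetical; in practice the per-variant figure would come from a sample size calculator.

```python
import math


def weeks_for_page_test(site_weekly_visitors: float, page_share: float,
                        visitors_per_variant: int, variants: int = 2,
                        min_weeks: int = 2) -> int:
    """Whole weeks a test on one page needs, given the share of site traffic
    that reaches that page. Never returns less than `min_weeks`."""
    page_weekly_visitors = site_weekly_visitors * page_share
    if page_weekly_visitors <= 0:
        raise ValueError("the page must receive some traffic")
    weeks = math.ceil(visitors_per_variant * variants / page_weekly_visitors)
    return max(weeks, min_weeks)


# Hypothetical site: 20K weekly visitors, 80% land on the homepage, 5% see pricing.
# Both tests need the same (hypothetical) 10,000 visitors per variant.
for page, share in [("homepage", 0.80), ("pricing page", 0.05)]:
    print(page, weeks_for_page_test(20_000, share, visitors_per_variant=10_000), "weeks")
```

With these inputs the homepage test lands on the 2-week floor, while the pricing-page test needs 20 weeks for the same sample.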

Business Cycles

E-commerce brands shouldn’t start tests right before Black Friday. B2B companies need to account for decision-making timelines that stretch across quarters.

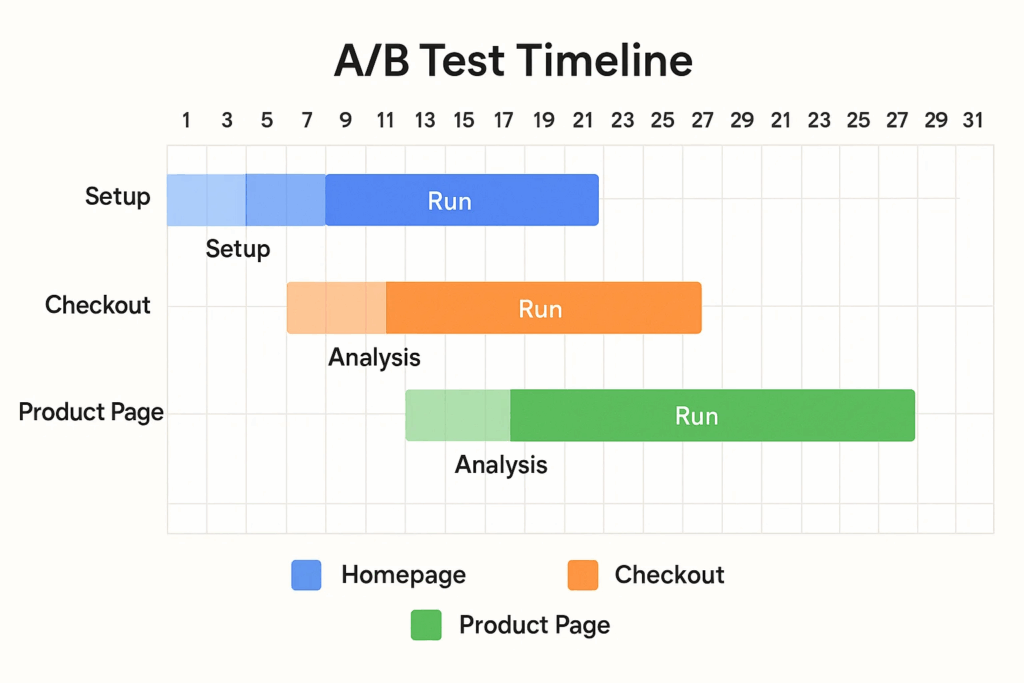

Test Complexity

Changing a headline = a simple test that can run alongside others. Redesigning your entire checkout flow = a complex test that needs isolation and longer runtime.

Team Bandwidth

Testing isn’t set-it-and-forget-it. Each test needs setup, monitoring, analysis, and implementation. Most teams can handle 3-5 active tests without quality suffering.

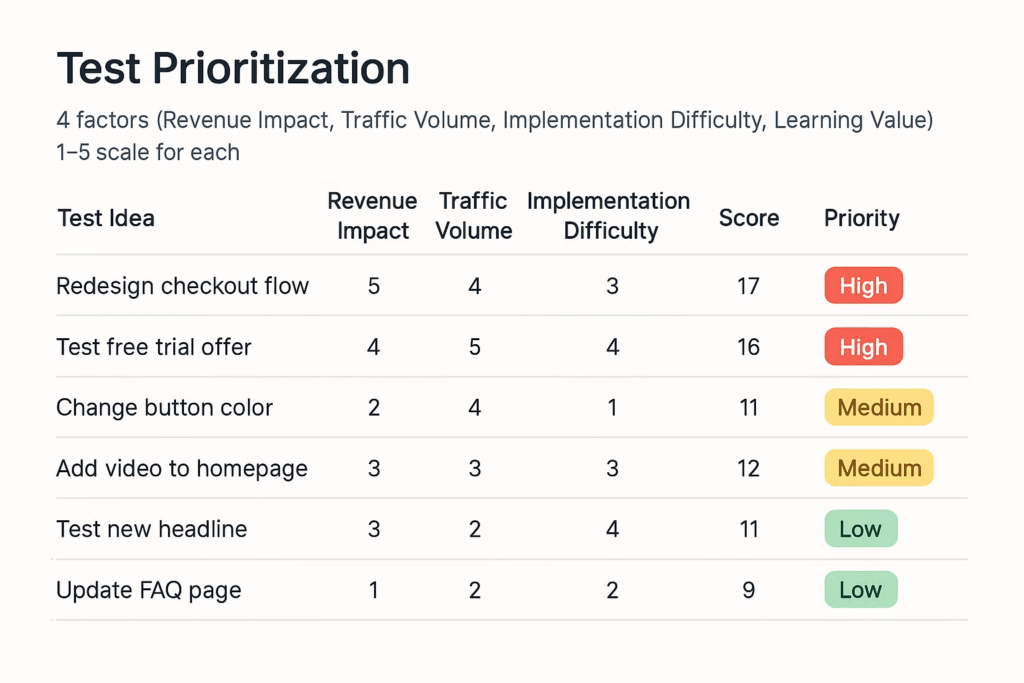

How to Prioritize Tests for Maximum Impact

Forget fancy prioritization frameworks. Use this simple approach:

- Revenue impact potential – What’s the maximum upside if this test wins?

- Traffic volume – Will this test get enough exposure to matter?

- Implementation difficulty – Can you actually execute this properly?

- Learning value – Will this teach you something useful regardless of the outcome?

Score each potential test from 1 to 5 on each of these factors. Test the highest-scoring ideas first.
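A spreadsheet works fine for this, but here is a minimal sketch of the same idea in code. Two details are assumptions rather than part of the approach described above: the four scores are summed with equal weight, and implementation difficulty is entered inverted as `ease` (5 = easiest to execute properly) so that a higher total always means a better candidate. The `Candidate` class and the backlog items are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    """A test idea scored 1-5 on each factor (higher is better)."""
    name: str
    revenue_impact: int   # maximum upside if the test wins
    traffic_volume: int   # exposure the tested page gets
    ease: int             # implementation difficulty, inverted: 5 = easiest to execute well
    learning_value: int   # usefulness of the result regardless of outcome

    def total(self) -> int:
        scores = (self.revenue_impact, self.traffic_volume, self.ease, self.learning_value)
        if any(not 1 <= s <= 5 for s in scores):
            raise ValueError(f"{self.name}: every score must be between 1 and 5")
        return sum(scores)


def prioritize(candidates: List[Candidate]) -> List[Candidate]:
    """Highest total first; ties keep their original order (sorted is stable)."""
    return sorted(candidates, key=Candidate.total, reverse=True)


backlog = [
    Candidate("Change button color", 1, 3, 5, 1),
    Candidate("Simplify checkout form", 5, 4, 3, 4),
    Candidate("Rework pricing page layout", 4, 2, 3, 4),
]
for idea in prioritize(backlog):
    print(idea.total(), idea.name)
```

Here the checkout idea (16) comes first and the button-color test (10) comes last: it is easy to build, but the other factors hold it back.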

The A/B Test Development Agency Reality

At BrillMark, we typically run 3-4 A/B tests per month for most clients, not because that’s a magic number, but because that’s what most businesses can handle while maintaining quality.

I’ve seen agencies promise 10+ tests per month to win business, then deliver low-quality tests that don’t move the needle. Quantity without quality is worse than not testing at all.

How Much Traffic Is Actually Required to Run A/B Tests

The real answer depends on your current conversion rate and the effect size you’re trying to detect.

Minimum viable testing traffic:

- 1000 visitors per week to each test variation

- At least 100 conversions per variation during the test

- Minimum 2-week test duration (preferably 4 weeks)

Comfortable testing traffic:

- 2000+ visitors per week to each variation

- 200+ conversions per variation

- Can detect 10-20% improvements reliably

Use a sample size calculator, but expect most tests to need more traffic than it suggests, because real-world conditions aren’t as clean as statistical models.
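Many sample size calculators for conversion tests use some version of the standard normal-approximation formula for a two-sided, two-proportion z-test, sketched below. This is a generic textbook version, not the method of any particular tool; the function name and the defaults (5% significance, 80% power) are assumptions.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.80) -> int:
    """Visitors needed in EACH variant to detect `relative_lift` over
    `baseline_rate` with a two-sided two-proportion z-test
    (normal approximation, no continuity correction)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    if relative_lift == 0 or not (0 < p1 < 1 and 0 < p2 < 1):
        raise ValueError("need a nonzero lift and both rates strictly between 0 and 1")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    p_bar = (p1 + p2) / 2
    # n = (z_a*sqrt(2*p_bar*(1-p_bar)) + z_b*sqrt(p1(1-p1) + p2(1-p2)))^2 / (p2 - p1)^2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


for lift in (0.10, 0.20):
    n = sample_size_per_variant(0.03, lift)
    print(f"3% baseline, {lift:.0%} lift: {n:,} visitors per variant")
```

At a 3% baseline this asks for roughly 53,000 visitors per variant to detect a 10% relative lift and roughly 14,000 for a 20% lift. The requirement drops quickly as the baseline rate or target lift grows, so run it with your own numbers and treat the result as a floor.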

Bottom Line

Most companies should run 2-4 A/B tests per month, if they have the traffic and resources to do it properly.

If you’re trying to run more tests than that, you’re probably either:

- Running low-quality tests

- Not analyzing results properly

- Testing things that don’t matter

- Spreading your team too thin

The goal isn’t to run as many tests as possible. It’s to run enough good tests to keep learning. Focus on improving your conversion rate at a sustainable pace.

Start with one test per month executed properly. Get comfortable with the process. Then scale up as your traffic and team capacity allow.

Quality over quantity. Always.

We’ve been running A/B tests for companies for more than 10 years, and we’ve compiled a list of test ideas from that experience. Give it a read:

E-commerce A/B Test Ideas: After 200+ Experiments in 2025

About BrillMark

We’re a development agency that helps top CRO agencies scale their operations and get more tests live, faster. We bring over a decade of experience and the trust of a large client base. We’ve served as the dedicated development arm for partners like GrowthHit and FunnelEnvy, and we’ve executed successful tests for major brands like Micro Center and Burga.

Our team is proficient in all the main platforms, from Shopify and BigCommerce to WordPress and AEM, as well as the major CRO tools like VWO, Optimizely, and Convert. We handle the entire development lifecycle, from initial test setup to final deployment and QA, allowing your team to focus on strategy and analysis.

Think of us as a seamless extension of your team, providing the full-stack capability you need to take on more clients and bigger projects.

We’d love to learn more about you and how you operate, and to explore a potential partnership. Are you available for a brief conversation sometime next week?
