How Google made $200 million from testing blue colors, and what your business can learn from the world’s most successful conversion optimization experiments

What is A/B Testing?

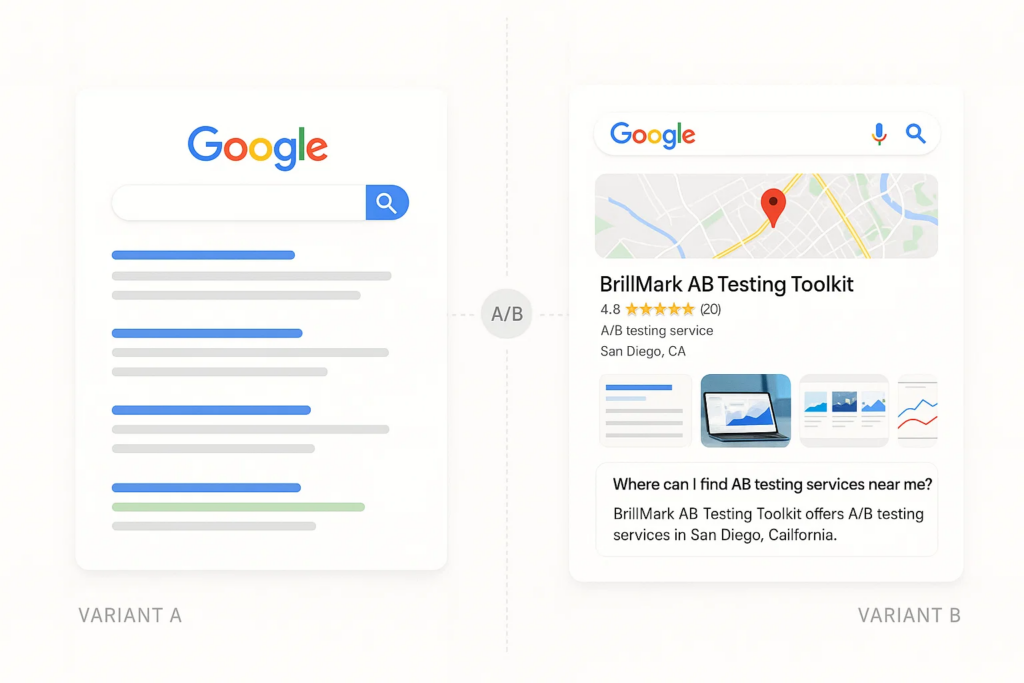

A/B testing, also called split testing, is a core technique of conversion rate optimization (CRO). It is a controlled experiment that compares two versions of a webpage, email, or app to determine which performs better. By randomly showing version A to one group of users and version B to another group, businesses can make data-driven decisions instead of relying on guesswork.

The A/B Testing Process

The A/B testing methodology follows a simple but powerful framework:

- Hypothesis Formation: Identify what you want to improve and why

- Variable Selection: Choose one element to test (headline, button, image, etc.)

- Test Creation: Develop version A (control) and version B (variant)

- Traffic Split: Randomly divide your audience between both versions

- Data Collection: Measure key performance indicators (KPIs)

- Statistical Analysis: Determine which version performs significantly better

- Implementation: Roll out the winning version to all users
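The traffic split step above can be sketched in a few lines. This is a minimal illustration, not how any particular testing tool does it: the function name `assign_variant`, the experiment name, and the user IDs are all hypothetical. It hashes the user ID so the same visitor always sees the same version, which a plain random draw on every page load would not guarantee.

```python
import hashlib

def assign_variant(user_id: str, experiment: str, treatment_share: float = 0.5) -> str:
    """Deterministically map a user to 'A' (control) or 'B' (variant)."""
    # Salting with the experiment name keeps assignments independent across tests.
    digest = hashlib.sha256(f"{experiment}:{user_id}".encode("utf-8")).hexdigest()
    # Interpret the first 8 hex digits as a number in [0, 1).
    bucket = int(digest[:8], 16) / 0x100000000
    return "B" if bucket < treatment_share else "A"

# Hypothetical usage: count how 10,000 made-up users are split.
counts = {"A": 0, "B": 0}
for i in range(10_000):
    counts[assign_variant(f"user-{i}", "signup-button-color")] += 1
print(counts)  # close to an even split
```

Because the assignment depends only on the user ID and the experiment name, it can run on the server or in the browser without storing any state.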

Key A/B Testing Terminology

- Control Group: Users who see the original version (A)

- Treatment Group: Users who see the modified version (B)

- Conversion Rate: Percentage of users who complete the desired action

- Statistical Significance: Confidence that results aren’t due to random chance

- Confidence Level: Typically 95%, meaning that if the two versions truly performed the same, a difference this large would show up by chance only about 5% of the time

- Sample Size: Number of visitors needed for reliable results

Why A/B Testing Matters for Your Business

The Cost of Poor Conversion Rates

Most websites convert poorly. The average ecommerce conversion rate is just 2.86%, meaning roughly 97 out of every 100 visitors leave without buying. Even small improvements can have massive impacts:

- 10% conversion improvement on a $1M revenue site = $100,000 additional annual revenue

- 20-percentage-point increase in email open rate across 100,000 subscribers = 20,000 more subscribers opening each email

- 5% reduction in cart abandonment = significant revenue recovery
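The arithmetic behind the first bullet is worth spelling out, because "10% improvement" here means a relative lift, not ten percentage points. The sketch below assumes traffic and average order value stay constant, which the bullets do not state; the function names are illustrative.

```python
def added_revenue(annual_revenue: float, relative_lift: float) -> float:
    """Extra annual revenue from a relative conversion-rate lift,
    assuming traffic and average order value are unchanged."""
    return annual_revenue * relative_lift

def lifted_rate(baseline_rate: float, relative_lift: float) -> float:
    """Conversion rate after applying a relative lift."""
    return baseline_rate * (1 + relative_lift)

print(round(added_revenue(1_000_000, 0.10), 2))  # 100000.0
print(round(lifted_rate(0.0286, 0.10), 5))       # 0.03146
```

In other words, a 10% relative lift moves a 2.86% conversion rate to about 3.15%, not to 12.86%.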

Learn more about improving your conversion rates and reducing cart abandonment.

Benefits of A/B Testing for Business Growth

1. Increased Revenue and ROI

Companies using A/B testing software see average revenue increases of 15-25% annually. By optimizing existing traffic instead of buying more, you maximize return on advertising spend (ROAS).

2. Improved User Experience

Website optimization through A/B testing creates better user experiences. When forms are easier to complete, pages load faster, and navigation is clearer, customers are happier and more likely to convert.

3. Risk Reduction

Before making major website changes, split testing lets you validate ideas on a small percentage of traffic. This prevents costly mistakes that could hurt conversion rates.

4. Competitive Advantage

While competitors guess what works, data-driven companies know what works. This creates a compounding advantage over time.

5. Better Marketing Performance

Email A/B testing, landing page optimization, and digital marketing campaigns all benefit from systematic testing approaches.

Famous A/B Testing Case Studies That Generated Millions

Google’s $200 Million Blue Link Experiment

Google’s most famous A/B test involved testing 41 different shades of blue for search result links. The winning shade increased click-through rates enough to generate an estimated $200 million in additional annual revenue.

Key Takeaway: Even tiny changes can have massive financial impacts at scale.

Netflix’s Personalized Movie Posters

Netflix runs thousands of multivariate tests annually, including showing different movie posters to different users for the same content. Their algorithm determines whether to show you an action-oriented poster or a romance-focused poster based on your viewing history.

Results:

- 20-30% increase in content engagement

- Reduced bounce rates on content pages

- Higher user satisfaction scores

Key Takeaway: Personalization through testing dramatically improves user engagement.

Further reading: “Sequential A/B Testing Keeps the World Streaming Netflix, Part 1: Continuous Data” (Netflix Technology Blog)

Amazon’s “Continue Shopping” vs “Proceed to Checkout”

Amazon tested different button text on their cart page:

- Version A: “Continue Shopping”

- Version B: “Proceed to Checkout”

Results: “Proceed to Checkout” increased conversions by 8.2%, worth millions in additional revenue.

Key Takeaway: Button copy and call-to-action optimization significantly impact conversion rates.

Spotify’s Premium Upgrade Tests

Spotify continuously tests its premium upgrade flow:

Test Elements:

- Free trial length (30 days vs 60 days vs 90 days)

- Upgrade button colors and placement

- Pricing presentation

- Social proof elements

Results: Their optimized upgrade flow increased premium conversions by 27%.

HubSpot’s Lead Generation Form Optimization

HubSpot tested form length and field requirements:

- Original Form: 7 fields required

- Test Variation: 3 fields required

Results:

- 120% increase in form completions

- Only 15% decrease in lead quality

- Net positive ROI from increased volume

Check out our guide on lead generation optimization for more form testing strategies.

How to Set Up Your First A/B Test: Step-by-Step Guide

Step 1: Identify Testing Opportunities

Start with high-impact, low-effort tests:

High-Priority Elements to Test:

- Headlines and value propositions

- Call-to-action buttons (text, color, placement)

- Product images and descriptions

- Pricing presentation

- Form length and fields

- Navigation and menu structure

Pages to Test First:

- Landing pages (highest traffic)

- Product pages (direct revenue impact)

- Checkout process (reduce abandonment)

- Email signup forms (build audience)

Step 2: Formulate a Hypothesis

A strong A/B testing hypothesis includes:

- Current situation: “Our current signup button has a 3% conversion rate.”

- Proposed change: “Changing the button color from blue to orange”

- Expected outcome: “Will increase conversions by 15%”

- Reasoning: “Because orange creates more urgency and stands out better.”

Step 3: Determine Sample Size

Use an A/B testing calculator to determine the required sample size based on:

- Current conversion rate

- Minimum detectable effect (how big a change you want to detect)

- Statistical power (typically 80%)

- Significance level (typically 5%, which corresponds to a 95% confidence level)

Example: To detect a 10% relative improvement on a 5% conversion rate (from 5.0% to 5.5%) at 95% confidence and 80% power, you need roughly 31,000 visitors per variation. Larger effects need far less traffic. Use tools like Optimizely’s sample size calculator or Evan Miller’s calculator to determine your specific requirements.
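For readers who want to see what such calculators do under the hood, here is a minimal sketch using the standard normal-approximation formula for comparing two proportions. It assumes a two-sided test and treats the minimum detectable effect as a relative lift; individual calculators use slightly different formulas and defaults, so their figures will not match exactly. The function name is illustrative.

```python
import math
from statistics import NormalDist

def sample_size_per_variation(baseline_rate, relative_mde, alpha=0.05, power=0.80):
    """Approximate visitors needed per variation for a two-sided
    two-proportion z-test (normal approximation)."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # about 1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)           # about 0.84 for 80% power
    variance = p1 * (1 - p1) + p2 * (1 - p2)
    n = (z_alpha + z_beta) ** 2 * variance / (p2 - p1) ** 2
    return math.ceil(n)

# 5% baseline, 10% relative lift: on the order of 31,000 per variation
print(sample_size_per_variation(0.05, 0.10))
# Doubling the detectable lift cuts the requirement to roughly a quarter
print(sample_size_per_variation(0.05, 0.20))
```

The required sample grows with the inverse square of the effect size, which is why small expected lifts on low baseline rates demand so much traffic.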

Step 4: Choose Your Testing Method

Simple A/B Testing

- Compare two versions

- Test one element at a time

- Easiest to analyze results

Multivariate Testing

- Test multiple elements simultaneously

- Requires larger sample sizes

- Shows interaction effects between elements

Multi-Armed Bandit Testing

- Automatically allocates more traffic to winning variations

- Reduces opportunity cost during testing

- More complex to set up
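To make the multi-armed bandit idea concrete, here is a small simulation using Thompson sampling, one common bandit algorithm (commercial tools may use others). The conversion rates passed in are made-up values used only to simulate visitors; in a live test they are unknown.

```python
import random

def thompson_sampling(true_rates, rounds, seed=0):
    """Simulate Beta-Bernoulli Thompson sampling over simulated visitors.

    true_rates are hypothetical conversion rates used only to simulate outcomes.
    Returns the number of visitors sent to each variation.
    """
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(rounds):
        # Draw a plausible conversion rate for each arm from its Beta posterior.
        samples = [rng.betavariate(s + 1, f + 1) for s, f in zip(successes, failures)]
        arm = samples.index(max(samples))
        # Simulate whether this visitor converts on the chosen variation.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]

pulls = thompson_sampling([0.05, 0.15], rounds=5000)
print(pulls)  # most traffic ends up on the second, better-converting arm
```

The trade-off is that the losing variation collects less data, so the final estimate of how much worse it is will be less precise than in a fixed 50/50 split.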

Step 5: Set Up Tracking and Analytics

Essential A/B testing metrics to track:

Primary Metrics:

- Conversion rate: Main goal completion

- Revenue per visitor: For e-commerce sites

- Click-through rate: For email and ads

- Engagement time: For content sites

Secondary Metrics:

- Bounce rate: Overall page quality

- Pages per session: User engagement

- Cart abandonment rate: E-commerce funnel health

- Customer lifetime value: Long-term impact
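As a rough illustration of how several of these metrics fall out of raw session data, the sketch below uses a hypothetical `Session` record. The field names are assumptions, and "bounce" is defined here as a single-page session, which may differ from your analytics tool's definition.

```python
from dataclasses import dataclass

@dataclass
class Session:
    variant: str       # "A" or "B"
    converted: bool
    revenue: float     # 0.0 when the visitor did not buy
    pages_viewed: int

def summarize(sessions, variant):
    """Compute per-variant metrics from a list of sessions."""
    rows = [s for s in sessions if s.variant == variant]
    n = len(rows)
    if n == 0:
        return None
    return {
        "visitors": n,
        "conversion_rate": sum(s.converted for s in rows) / n,
        "revenue_per_visitor": sum(s.revenue for s in rows) / n,
        "bounce_rate": sum(s.pages_viewed == 1 for s in rows) / n,
        "pages_per_session": sum(s.pages_viewed for s in rows) / n,
    }

# Tiny made-up dataset, for illustration only.
sessions = [
    Session("A", True, 40.0, 3),
    Session("A", False, 0.0, 1),
    Session("B", True, 60.0, 4),
    Session("B", True, 20.0, 2),
]
print(summarize(sessions, "A"))
print(summarize(sessions, "B"))
```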

Step 6: Run the Test

Best Practices:

- Run tests for full business cycles (including weekends)

- Minimum 1-2 weeks for statistical validity

- Don’t stop tests early, even if one version appears to be winning

- Monitor for external factors (holidays, marketing campaigns, technical issues)
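A quick way to turn these practices into a planned end date is to divide the required sample by daily traffic and round up to whole weeks. The helper below is a sketch with made-up numbers; `estimate_duration_days` is an illustrative name, and the one-week floor is an assumption based on the guidance above.

```python
import math

def estimate_duration_days(visitors_per_variation, variations, daily_visitors):
    """Days needed to reach the planned sample, rounded up to whole weeks
    so every weekday and weekend is represented equally."""
    total_needed = visitors_per_variation * variations
    days = math.ceil(total_needed / daily_visitors)
    return max(7, math.ceil(days / 7) * 7)

# 10,000 visitors per variation, 2 variations, 1,500 visitors per day
print(estimate_duration_days(10_000, 2, 1_500))  # 14
```

Fixing the duration before launch also removes the temptation to stop as soon as one version pulls ahead.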

Step 7: Analyze Results

Statistical Significance:

- Use proper statistical tests (Chi-square, t-test)

- Achieve 95% confidence level minimum

- Consider practical significance vs statistical significance
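For conversion-rate comparisons, the check usually comes down to a two-proportion z-test (equivalent to a chi-square test on the 2x2 table without continuity correction). Here is a minimal sketch using only the standard library; the visitor and conversion counts are invented for illustration.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided z-test for a difference in conversion rates.
    Returns (z statistic, p-value)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided tail probability
    return z, p_value

# Made-up example: 5.0% vs 5.7% conversion on 10,000 visitors each
z, p = two_proportion_z_test(conv_a=500, n_a=10_000, conv_b=570, n_b=10_000)
print(f"z = {z:.2f}, p = {p:.4f}")  # p falls below 0.05 here

# Same 2-point gap on only 100 visitors each: nowhere near significant
z_small, p_small = two_proportion_z_test(49, 100, 51, 100)
print(f"z = {z_small:.2f}, p = {p_small:.4f}")
```

A p-value below 0.05 corresponds to the 95% confidence threshold. Whether a statistically significant lift is large enough to matter commercially is a separate, practical question.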

Segment Analysis:

- Mobile vs desktop performance

- New vs returning visitors

- Traffic source differences

- Geographic variations
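A segment breakdown can be as simple as grouping results by segment and variant. The sketch below assumes each record is a `(segment, variant, converted)` tuple, which is an illustrative format rather than any tool's export schema.

```python
from collections import defaultdict

def conversion_by_segment(records):
    """records: iterable of (segment, variant, converted) tuples.
    Returns {segment: {variant: (conversions, visitors, rate)}}."""
    counts = defaultdict(lambda: defaultdict(lambda: [0, 0]))
    for segment, variant, converted in records:
        cell = counts[segment][variant]
        cell[0] += int(converted)  # conversions
        cell[1] += 1               # visitors
    return {
        seg: {v: (c, n, c / n) for v, (c, n) in variants.items()}
        for seg, variants in counts.items()
    }

# Tiny made-up dataset, for illustration only.
records = [
    ("mobile", "A", True), ("mobile", "A", False),
    ("mobile", "B", True), ("mobile", "B", True),
    ("desktop", "A", False), ("desktop", "B", True),
]
for segment, variants in conversion_by_segment(records).items():
    print(segment, variants)
```

Keep in mind that each segment contains fewer visitors than the full test, and checking many segments increases the chance that one of them looks like a winner purely by chance.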

A/B Testing Tools and Platforms

Enterprise A/B Testing Software

1. Optimizely

- Best for: Large enterprises

- Pricing: $50,000+ annually

- Features: Advanced targeting, multivariate testing, and personalization

- Pros: Robust analytics, easy-to-use visual editor

- Cons: Expensive, complex setup

2. VWO (Visual Website Optimizer)

- Best for: Mid-size businesses

- Pricing: $199-$999/month

- Features: Heatmaps, session recordings, form analytics

- Pros: All-in-one CRO platform

- Cons: Learning curve for advanced features

3. Adobe Target

- Best for: Enterprise with Adobe ecosystem

- Pricing: Custom enterprise pricing

- Features: AI-powered personalization, real-time decision-making

- Pros: Deep integration with Adobe products

- Cons: Very expensive, requires technical expertise

Mid-Market A/B Testing Solutions

4. Unbounce

- Best for: Landing page optimization

- Pricing: $99-$625/month

- Features: Drag-and-drop builder, conversion tracking

- Pros: Easy landing page creation and testing

- Cons: Limited to landing pages

5. Convert.com

- Best for: Privacy-focused testing

- Pricing: $699-$2,499/month

- Features: GDPR compliance, advanced targeting

- Pros: Strong privacy features, good customer support

- Cons: Higher pricing than competitors

Free and Budget A/B Testing Tools

6. Google Optimize (Discontinued 2023)

- Status: No longer available

- Alternative: Third-party testing tools that integrate with Google Analytics 4

7. Microsoft Clarity + Custom Solutions

- Best for: Budget-conscious businesses

- Pricing: Free

- Features: Heatmaps, session recordings

- Pros: Completely free, integrates with analytics

- Cons: Requires technical setup for A/B testing

8. Mailchimp (Email A/B Testing)

- Best for: Email marketing optimization

- Pricing: $10-$300/month

- Features: Subject line testing, send time optimization

- Pros: Built into the email platform

- Cons: Limited to email testing

Learn more about email marketing tools in our comprehensive guide.

Specialized A/B Testing Tools

9. Hotjar

- Best for: Understanding user behavior

- Pricing: $32-$171/month

- Features: Heatmaps, user recordings, surveys

- Pros: Excellent for hypothesis generation

- Cons: Limited A/B testing capabilities

10. Crazy Egg

- Best for: Visual optimization insights

- Pricing: $29-$249/month

- Features: Click tracking, scroll maps

- Pros: Easy setup, clear visualizations

- Cons: Basic A/B testing features

For a complete comparison of conversion optimization tools, check out our detailed review.

Common A/B Testing Mistakes to Avoid

1. Testing Too Many Elements Simultaneously

Mistake: Changing headlines, buttons, images, and layout all at once.

Problem: Can’t identify which change caused the improvement.

Solution: Test one element at a time or use proper multivariate testing methodology

2. Insufficient Sample Size

Mistake: Concluding tests with only 100-500 visitors

Problem: Results aren’t statistically reliable

Solution: Use sample size calculators and wait for adequate traffic

3. Stopping Tests Too Early

Mistake: Ending tests after 24-48 hours when one version is “winning”

Problem: Natural variation can create false positives

Solution: Run tests for a minimum of 1-2 weeks and until the planned sample size is reached before judging significance
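The effect of stopping early is easy to demonstrate with a simulated A/A test, where both versions share the same true conversion rate and any "significant" result is therefore a false positive. The sketch below compares checking once at the end with checking at ten interim looks and stopping at the first significant one. All parameters are arbitrary choices for illustration.

```python
import math
import random

def is_significant(conv_a, n_a, conv_b, n_b, z_crit=1.96):
    """Two-proportion z-test at roughly the 95% confidence level."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    if pooled in (0.0, 1.0):
        return False
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    return abs(conv_b / n_b - conv_a / n_a) / se > z_crit

def simulate_peeking(rate=0.10, visitors=2000, looks=10, runs=300, seed=1):
    """A/A simulation: returns (false positive rate when stopping at the first
    significant look, false positive rate when testing only at the end)."""
    rng = random.Random(seed)
    step = visitors // looks  # assumes looks <= visitors
    stopped_early = final_only = 0
    for _ in range(runs):
        conv_a = conv_b = 0
        flagged = False
        for n in range(1, visitors + 1):
            conv_a += rng.random() < rate
            conv_b += rng.random() < rate
            if n % step == 0:
                sig = is_significant(conv_a, n, conv_b, n)
                flagged = flagged or sig
        stopped_early += flagged
        final_only += sig  # outcome of the last look only
    return stopped_early / runs, final_only / runs

peeking, fixed = simulate_peeking()
print(f"false positives with peeking: {peeking:.1%}, fixed horizon: {fixed:.1%}")
```

With a fixed horizon the false positive rate stays near the nominal 5%, while repeated peeking typically pushes it well above that, even though nothing about the two versions differs.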

4. Ignoring Statistical Significance

Mistake: Implementing changes based on small differences (51% vs 49%)

Problem: Differences might be due to random chance

Solution: Require 95% confidence level minimum

5. Testing Low-Traffic Pages

Mistake: Running A/B tests on pages with <1,000 monthly visitors

Problem: Will take months or years to get reliable results

Solution: Focus on high-traffic pages or use user testing for low-traffic pages

6. Not Considering External Factors

Mistake: Running tests during Black Friday, product launches, or marketing campaigns

Problem: External factors can skew results

Solution: Account for seasonal trends and marketing activities

7. Testing Insignificant Changes

Mistake: Testing tiny font size changes or minor color variations

Problem: Unlikely to produce measurable improvements

Solution: Focus on elements that significantly impact user decisions

8. Implementing Losing Variations

Mistake: Going with personal preference despite test results

Problem: Ignores data-driven insights

Solution: Always implement the statistically significant winner

Advanced A/B Testing Strategies

1. Sequential Testing and Test Velocity

Run continuous testing programs instead of one-off experiments:

Monthly Testing Calendar:

- Week 1-2: Landing page headline tests

- Week 3-4: Product page optimization

- Ongoing: Email subject line testing

Test Velocity Optimization:

- Prioritize high-impact tests using the ICE framework (Impact, Confidence, Ease)

- Run parallel tests on different page sections

- Use shorter test durations for high-traffic elements
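ICE prioritization is simple enough to keep in a spreadsheet, but a short script shows the mechanics. This sketch scores each idea from 1 to 10 on Impact, Confidence, and Ease and averages the three; some teams multiply the scores instead, so treat the formula as one convention among several. The test ideas and scores are made up.

```python
from dataclasses import dataclass

@dataclass
class ExperimentIdea:
    name: str
    impact: int      # 1-10: how much the change could move the metric
    confidence: int  # 1-10: how sure you are it will work
    ease: int        # 1-10: how cheap it is to build and run

    @property
    def ice_score(self) -> float:
        # Simple average; an assumption, since ICE has no single official formula.
        return (self.impact + self.confidence + self.ease) / 3

ideas = [
    ExperimentIdea("Redesigned checkout", impact=9, confidence=5, ease=2),
    ExperimentIdea("Shorter signup form", impact=8, confidence=6, ease=7),
    ExperimentIdea("New hero headline", impact=6, confidence=5, ease=9),
]

for idea in sorted(ideas, key=lambda i: i.ice_score, reverse=True):
    print(f"{idea.ice_score:.1f}  {idea.name}")
```

Here the shorter signup form ranks first because it scores well on all three dimensions, while the checkout redesign drops to last despite its high potential impact.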

2. Segmented A/B Testing

Different user segments often respond differently to changes:

Common Segmentation Strategies:

- Device type: Mobile vs desktop optimization

- Traffic source: Organic vs paid vs social media

- User behavior: New vs returning visitors

- Geographic location: Different regions, cultures

- Customer value: High-value vs low-value customers

Example: E-commerce Button Testing

- Mobile users: Prefer larger, thumb-friendly buttons

- Desktop users: Respond better to traditional button sizes

- Result: 15% overall improvement through segmented approach

3. Personalization Through A/B Testing

Use testing to create personalized experiences:

Dynamic Content Testing:

- Industry-specific landing pages for B2B visitors

- Product recommendations based on browsing history

- Geolocation-based offers and messaging

- Time-sensitive promotions based on user timezone

Behavioral Trigger Testing:

- Exit-intent popups with different offers

- Scroll-based content reveals

- Time-on-page triggers for engagement

4. Cross-Platform A/B Testing

Test consistency across multiple touchpoints:

Omnichannel Testing Strategy:

- Email to landing page consistency

- Ad creative to landing page alignment

- Mobile app to website experience continuity

- Social media to conversion funnel optimization

5. Long-Term Impact Testing

Measure beyond immediate conversions:

Extended Metrics:

- Customer lifetime value changes

- Retention rate improvements

- Brand perception shifts

- Customer satisfaction scores

Cohort Analysis:

- Track user behavior over 30, 60, 90 days

- Measure repeat purchase rates

- Analyze subscription renewals

- Monitor referral generation

The Future of A/B Testing in 2025 and Beyond

1. AI-Powered Testing Platforms

Machine learning is revolutionizing conversion rate optimization:

Automated Hypothesis Generation:

- AI analyzes user behavior to suggest test ideas

- Predictive analytics identifies high-impact opportunities

- Natural language processing optimizes copy automatically

Dynamic Traffic Allocation:

- Multi-armed bandit algorithms automatically send more traffic to winning variations

- Real-time optimization adjusts tests based on performance

- Seasonal adjustment accounts for time-based patterns

2. Privacy-First Testing

With GDPR, CCPA, and a cookieless future:

Server-Side Testing:

- Tests run on your servers instead of user browsers

- Better performance and privacy compliance

- More reliable data collection

First-Party Data Focus:

- Customer data platforms enable better segmentation

- Zero-party data from surveys and preferences

- Consensual data collection builds trust

3. Voice and AI Interface Testing

As interfaces evolve beyond traditional websites:

Voice Commerce Optimization:

- Testing voice search responses

- Conversational interface optimization

- Smart speaker experience testing

AI Chatbot Testing:

- Response effectiveness testing

- Conversation flow optimization

- Personalization algorithm testing

4. Micro-Moment Testing

Testing for mobile-first, instant-gratification experiences:

Speed-Focused Testing:

- Page load time optimization

- One-click purchase flows

- Progressive web app performance

Context-Aware Testing:

- Location-based experiences

- Time-sensitive offers

- Situational content optimization

Getting Started with A/B Testing Today

Immediate Action Steps

- Audit your current conversion funnel and identify bottlenecks (use our conversion funnel analysis template)

- Choose an A/B testing tool that fits your budget and technical requirements

- Start with high-traffic, high-impact pages like your homepage or main landing page

- Create your first test hypothesis focusing on headlines or call-to-action buttons

- Set up proper analytics tracking to measure results accurately

Learn more about setting up Google Analytics 4 for proper tracking.

Building a Testing Culture

Team Involvement:

- Train marketing teams on testing methodology

- Involve designers in creating test variations

- Educate stakeholders on statistical significance

- Create testing calendars and regular review processes

Documentation and Learning:

- Document all tests with hypotheses, results, and learnings

- Share results across the organization

- Build a knowledge base of what works for your audience

- Regular training on new testing techniques and tools

Conclusion: The Competitive Advantage of Data-Driven Optimization

A/B testing isn’t just a marketing tactic—it’s a competitive advantage that compounds over time. Companies that systematically test and optimize their digital experiences see dramatic improvements in:

- Conversion rates (15-25% average improvement)

- Customer lifetime value (10-30% increase)

- Return on ad spend (20-40% improvement)

- User satisfaction and retention

The businesses that will dominate in 2025 and beyond are those that make decisions based on data, not opinions. Split testing provides the framework to turn your website into a revenue-generating machine through systematic, scientific optimization.

Start small, test consistently, and let the data guide your decisions. Your competitors are either already doing this, or they’re giving you the opportunity to gain a significant advantage.

For more advanced strategies, check out our comprehensive CRO guide and digital marketing resources.

Answers to Your Questions

What is the difference between A/B testing and multivariate testing?

A/B testing compares two versions of a single element, while multivariate testing tests multiple elements simultaneously. A/B testing is simpler and requires less traffic, while multivariate testing can identify interaction effects between different elements.

How long should I run an A/B test?

Run tests for at least 1-2 weeks and until you reach the sample size you calculated in advance, then check for statistical significance (typically at 95% confidence). The exact duration depends on your traffic volume and conversion rates. High-traffic sites might get results in days, while low-traffic sites might need months.

What sample size do I need for reliable A/B test results?

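Sample size depends on your current conversion rate and the improvement you want to detect. Requirements vary widely: a few thousand visitors per variation can be enough to detect a large effect on a high-converting page, while a small lift on a low baseline rate can require tens of thousands. Use online calculators to determine exact requirements for your situation.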

Can I run multiple A/B tests simultaneously?

Yes, but ensure tests don’t interfere with each other. Test different page elements or different pages entirely. Avoid testing overlapping elements (like two different headline tests on the same page) as this can skew results.

What should I do if my A/B test shows no significant difference?

No result is still a result. It means your change didn’t impact user behavior significantly. Document the learning, generate new hypotheses, and test more dramatic changes. Sometimes, smaller changes require larger sample sizes to detect differences.

Ready to start optimizing your conversion rates through A/B testing? Contact our CRO experts for a free consultation or download our free A/B testing checklist and calculator to begin your data-driven optimization journey today.

Ready to Elevate Your Website’s Performance?

Stop leaving valuable conversions on the table because of technical bottlenecks or testing limitations. BrillMark provides the specialized A/B test development expertise to turn your website into a high-performing conversion engine. Whether you need to hire an A/B testing developer for a specific project or want ongoing A/B testing services, we are here to help. Our skilled experimentation developers implement robust, reliable, and insightful tests that deliver measurable results and fuel your growth. Learn more about our comprehensive A/B Test Development services.

👉 Contact BrillMark today to discuss your A/B test development needs. Let's build a winning experimentation program together!